Cats Effect 3, Blocking Work, and Clean Shutdowns

How I Learned to Stop Worrying and Love IO.blocking and the Case of the Never-Ending JVM

Cats Effect is a foundation for building safe, concurrent, and resource-aware applications in the Scala ecosystem. It gives you tools to express side effects, run them predictably, and compose them in ways that avoid the pitfalls of traditional thread management. Instead of juggling futures and callbacks by hand, you describe programs as IO values and let the runtime schedule them efficiently. This model not only simplifies reasoning about concurrency, it also helps ensure that tasks do not leak resources or starve one another. In practice this means production code behaves more like the simple examples you sketch on the whiteboard, with the runtime handling the heavy lifting.

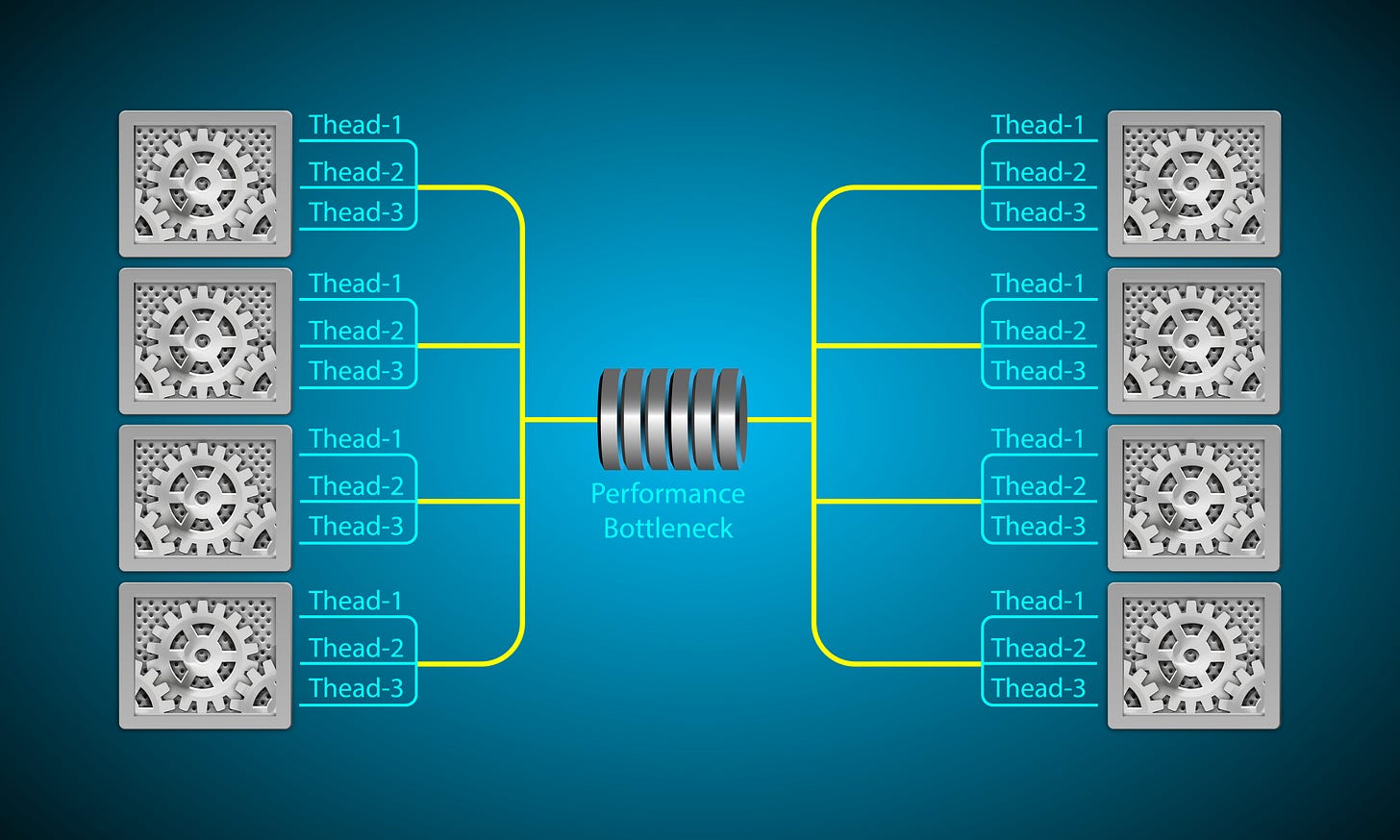

A key part of this runtime is how it manages threads. Cats Effect separates compute-bound work from blocking calls so that long waits on I/O do not stall CPU-heavy tasks. Ordinary IO runs on the compute pool, which is sized to the number of available processors unless you configure it otherwise. When you call IO.blocking, the work is shifted to the blocking pool, which grows as needed to handle operations like database queries or file system access. Timed operations such as IO.sleep and timeouts are handled by a small timer backed by a ScheduledExecutorService. The runtime's design keeps these pools coordinated and ensures that fibers move across them in predictable ways. This separation of duties is what makes programs written in Cats Effect resilient, responsive, and able to scale without the developer micromanaging threads.
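To see the split between pools for yourself, a minimal sketch like the one below prints the name of the thread that evaluates an ordinary IO and the name of the thread that evaluates an IO.blocking thunk. The object name PoolDemo and the helper threadName are made up for this illustration, and the exact thread names are an implementation detail that differs between Cats Effect 3 releases, so treat the output as a diagnostic rather than a contract.

```scala
import cats.effect.{IO, IOApp}

object PoolDemo extends IOApp.Simple {

  // Returns the name of whichever thread evaluates the call.
  private def threadName(): String = Thread.currentThread().getName

  // Pairs the thread name seen by ordinary IO with the one seen inside IO.blocking.
  val program: IO[(String, String)] =
    for {
      compute  <- IO(threadName())          // evaluated by a compute worker
      blocking <- IO.blocking(threadName()) // evaluated by a thread set aside for blocking work
    } yield (compute, blocking)

  val run: IO[Unit] =
    program.flatMap { case (compute, blocking) =>
      IO.println(s"IO ran on: $compute") *>
        IO.println(s"IO.blocking ran on: $blocking")
    }
}
```

Running it a few times and comparing the two names is a quick way to check that a call you believe is blocking really leaves the compute workers.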

Cats Effect 3 removed Blocker and simplified how you route work to the right threads. Along the way many developers hit two issues: how to replace blocker.blockOn, and why the JVM sometimes refuses to exit with a message about non-daemon threads. This article distills a practical path through both. Code examples are available in my GitHub repo.

From Blocker to IO.blocking

In Cats Effect 2 you wrote blocker.blockOn(io) to move a task to a dedicated blocking pool. In Cats Effect 3 you use IO.blocking for synchronous blocking calls and you compose IO values in the usual way. Consider the following example. It shows a correct migration of a function that mixes regular effects with a blocking call.

    import cats.effect.IO

    def withBlocking: IO[Unit] =
      for {
        _ <- IO.println("on compute pool")
        _ <- IO.blocking {
          // real blocking call, e.g. JDBC, file I/O, legacy SDK
          println("on blocking pool")
        }
        _ <- IO.println("back on compute pool")
      } yield ()

A common mistake is to place an IO inside IO.blocking and never run it. The argument to IO.blocking must be a synchronous side effect, not another IO. That is a subtle but very important point in Cats Effect 3. Let's break it down carefully.

What IO.blocking is for

IO.blocking exists to tell the runtime that a synchronous call will block a thread and should therefore run on the dedicated blocking pool, not on the compute pool. Its signature is def blocking[A](thunk: => A): IO[A]. Notice the argument: it is a by-name plain value of type A, not an IO[A]. This is intentional. It means you are supposed to put something like a JDBC call, a file read, or a Thread.sleep inside.

    IO.blocking {
      // JDBC is synchronous and blocks a thread
      val rs = stmt.executeQuery("SELECT * FROM users")
      rs.next()
    }

What happens if you pass IO instead

Suppose you try the following program.

    IO.blocking {
      IO.println("hello")
    }

Inside the blocking thunk you are building an IO, not running it. The result of the whole IO.blocking call is IO[IO[Unit]]. Unless you explicitly flatten it, you will never actually execute the inner IO. That's why nothing prints in examples like this. Even if you flatten, you still miss the point: the only work the blocking pool sees is the construction of an IO object, which does not block. Any real blocking call is hidden inside the inner IO, and when that IO finally runs it is scheduled like any other effect, which may well be on the wrong pool.
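The nesting is easy to observe with a counter. The sketch below is an illustration only: NestedBlockingDemo, runs, and inner are hypothetical names, and it uses unsafeRunSync with the global runtime purely to keep the demonstration short, which is not how you would normally run an application.

```scala
import java.util.concurrent.atomic.AtomicInteger

import cats.effect.IO
import cats.effect.unsafe.implicits.global

object NestedBlockingDemo {

  // Counts how many times the inner effect actually executes.
  val runs = new AtomicInteger(0)

  val inner: IO[Unit] = IO { runs.incrementAndGet(); () }

  // The thunk only constructs `inner`, so the result type is IO[IO[Unit]].
  val nested: IO[IO[Unit]] = IO.blocking(inner)

  // Flattening sequences the inner IO after the (trivial) blocking step.
  val flattened: IO[Unit] = nested.flatten

  def main(args: Array[String]): Unit = {
    nested.unsafeRunSync()    // builds the inner IO and discards it
    println(s"after nested: ${runs.get()}")    // prints "after nested: 0"

    flattened.unsafeRunSync() // now the inner IO runs once
    println(s"after flattened: ${runs.get()}") // prints "after flattened: 1"
  }
}
```

The explicit type annotation on nested is the useful part in practice: if the compiler reports IO[IO[A]] where you expected IO[A], an IO has ended up inside a blocking thunk.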

Why the design is this way

Cats Effect keeps two concerns apart. IO.blocking deals only with raw synchronous side effects that would tie up a thread. IO composition (flatMap, for-comprehensions) deals with sequencing IO values that have already been built.

If IO.blocking accepted IO[A], developers might wrap whole programs in it, which would “launder” normal effects onto the blocking pool unnecessarily. That would waste resources and break the balance between compute and blocking pools. By forcing you to pass a plain synchronous thunk, the library makes sure only real blocking code gets shifted.

The right pattern

If you already have an IO, compose it directly, as in the following example.

    for {
      _ <- IO.println("on compute pool")
      _ <- IO.blocking {
        // raw blocking code here
        println("this is on the blocking pool")
      }
      _ <- IO.println("back on compute pool")
    } yield ()

If you need to adapt a third-party API that is synchronous and blocking, wrap its direct calls in IO.blocking. If you have already wrapped those calls in IO, don't put them inside IO.blocking again. The argument to IO.blocking must be a synchronous side effect because that thunk is exactly what the runtime shifts to the blocking pool; the runtime does not inspect your code to discover blocking on its own. Passing an IO only builds an effect value; it does not run it, and it hides the blocking behavior from the scheduler.
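One way to apply this advice is to put a thin wrapper around the synchronous API so that each direct call is wrapped in IO.blocking exactly once, and everything downstream composes plain IO values. The sketch below assumes a made-up LegacyClient class standing in for a real SDK; LegacyClientIO, AdapterDemo, and the key "user-42" are likewise hypothetical.

```scala
import cats.effect.{IO, IOApp}

// Hypothetical stand-in for a synchronous third-party SDK (not a real library).
final class LegacyClient {
  def fetch(key: String): String = {
    Thread.sleep(100) // simulates a call that parks the calling thread
    s"value-for-$key"
  }
}

// Thin wrapper: each direct SDK call is wrapped in IO.blocking exactly once.
final class LegacyClientIO(underlying: LegacyClient) {
  def fetch(key: String): IO[String] = IO.blocking(underlying.fetch(key))
}

object AdapterDemo extends IOApp.Simple {
  val client = new LegacyClientIO(new LegacyClient)

  // The wrapper already returns IO, so callers compose it directly
  // instead of wrapping it in IO.blocking a second time.
  val program: IO[String] =
    for {
      _     <- IO.println("requesting")
      value <- client.fetch("user-42")
      _     <- IO.println(s"received: $value")
    } yield value

  val run: IO[Unit] = program.void
}
```

Keeping IO.blocking at the boundary with the SDK means the rest of the code base never has to ask whether a given IO still needs to be shifted.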